September 11, 2023

How fast can Tari be?

How to scale a PoW system to 4,000 TPS

Ultimately, the success of Tari will be driven by the dApplications that are built on top of it, and not based on the underlying technology. But without a strong foundation of scalability, security, decentralisation, privacy, and amazing developer tools, those dApplications will never be built.

In this post, I share some ideas on how Tari is tackling the scalability portion of that challenge.

Side-stepping the blockchain trilemma

From RFC-0001:

“Speed, security and decentralisation typically form a trilemma that means that you need to settle for two out of the three.

“Tari attempts to resolve the dilemma by splitting operations over two discrete layers. Underpinning everything is a base layer that focuses on security and manages global state, and a second, digital assets layer that focuses on rapid finalisation and scalability.

“The distributed system trilemma tells us that these requirements are mutually exclusive.

“We can’t have fast, cheap digital assets and also highly secure and decentralized currency tokens on a single system.

“Tari overcomes this constraint by building two layers:

- A base layer that provides a public ledger of Tari coin transactions, secured by PoW to maximize security.

- A DAN consisting of a highly scalable, efficient side-chain that each manages the state of all digital assets.”

Base-layer transactions

The base layer is designed to be secure and decentralised. It is secured by proof-of-work, and all transactions are confidential by virtue of the Mimblewimble protocol.

The testnet parameters are set so that a block is mined every 120s on average.

The maximum number of transactions per block depends on the number of outputs – outputs are very large in Tari because of the need to provide a 580B range proof for each one – but assuming the standard 1 input, 2 output transaction, a single block can hold around 1,000 transactions. Mainnet will have a similar, if not identical, setup.

That gives the base layer a capacity of around 8.3 transactions per second (1,000 transactions every 120 seconds).
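As a quick sanity check, the arithmetic behind that figure can be sketched in a few lines of Python. The function name is purely illustrative; the inputs are the testnet figures quoted above (roughly 1,000 transactions per block, one block every 120s on average).

```python
def base_layer_tps(txs_per_block: int, block_interval_s: float) -> float:
    """Average transactions per second for a given block capacity and interval."""
    return txs_per_block / block_interval_s


# Figures from the text: ~1,000 transactions per block, one block every 120 s.
print(round(base_layer_tps(1_000, 120), 1))  # 8.3
```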

Oh noes! So slow. Them’s Bitcoin numbers!

Maybe, but when Tari is “at equilibrium”, the vast majority of activity will zip around on the digital assets layer. The base layer will only be used for:

- Validator node registrations

- Tari minting

- Smart contract template registrations

- Minotari transfers

None of these are expected to be high-volume activities in the long run, and so we expect the base layer to maintain sufficient capacity for these transactions whilst permitting a highly decentralised, secure, and censorship-resistant base layer.

In the event of unexpected elevated usage of the base layer (TRC-20s anyone?), a migration to a Monero-esque dynamic blocksize algorithm is not off the table for a future network upgrade.

Tari Digital Asset network capacity

The Tari digital assets network is being built using Cerberus. Cerberus is a high-throughput BFT algorithm that has the unique property of dividing the space of all possible smart contract states into predefined “addresses”. All addresses are pre-assigned to validators, so that the network is effectively self-sharding. The algorithm for sharding the state space has a few network-wide tuning parameters, such as “how many nodes should be assigned to each shard”.

We don’t know the “right number” for this yet, but gut feel says somewhere between 25 (allowing 8 byzantine nodes per shard) and 100 (allowing 33 byzantine nodes per shard) is the right range.
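The byzantine counts quoted here are consistent with the usual BFT bound that a committee of n nodes tolerates f faulty nodes when n ≥ 3f + 1. A minimal sketch, assuming that bound applies per shard (the function name is hypothetical):

```python
def max_byzantine(committee_size: int) -> int:
    """Largest f such that committee_size >= 3f + 1 (standard BFT bound)."""
    return (committee_size - 1) // 3


for n in (25, 100):
    print(n, "nodes per shard tolerates", max_byzantine(n), "byzantine nodes")
```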

In Cerberus, only shards that are involved in a transaction need to reach consensus on the transaction. Testnet experience will confirm this, but we expect most transactions to involve two or three shards.

To reach consensus, the involved shards form an ad-hoc HotStuff committee and reach finality in four rounds of communication. These communication rounds establish the following:

- Only the correct nodes are participating in consensus,

- The input state is valid and certified,

- The output state is valid,

- A certificate for the output has been created, and certified,

- Fees are apportioned and allocated to the appropriate nodes.

When a node is executing a transaction, some of the potentially heavy, time-consuming tasks are:

- Identifying the smart contracts that are referenced in the instruction and making sure a copy of the contract code is available locally,

- Firing up a TariVM instance and loading the contract code,

- Obtaining a valid copy of the transaction’s input,

- Executing the contract code on the input.

At the current pre-alpha stage of development of the Tari network, the time between a client wallet firing off a request and receiving a finalised answer is around 2-5 seconds. This is pretty good news, because there are many optimisations we can explore on every single one of the bullet points above, including:

- Reducing HotStuff communications to three or, recent research suggests, even two rounds, without sacrificing liveness or safety.

- Optimising the TariVM caching algorithm to keep popular contracts in a hot cache.

- Preempting requests and caching input state from other shards.

- Pre-compiling contracts to TariVM instances.

- Batching of instructions to amortise consensus rounds across multiple transactions.

Ultimately, we believe that we can get end-to-end finalisation of Tari instructions to under 1,000ms.

So the goal is for the latency experienced by clients on the network to be 1,000ms or less.

Individual nodes will not be sitting around idly waiting for network messages to arrive before moving on to the next instruction. Nodes can perform chained HotStuff, which allows them to move on to the next set of instructions while waiting to hear back on consensus results for previous sets of instructions.

Ultimately this makes Tari nodes CPU-bound in smart contract execution rather than network-bound.

Now, smart-contract execution speed is obviously highly variable, and I’m reaching deep into speculative territory here, but I can imagine that the majority of smart contract calls will be non-CPU-intensive DeFi and NFT contract calls that can be processed in 10ms - 100ms.

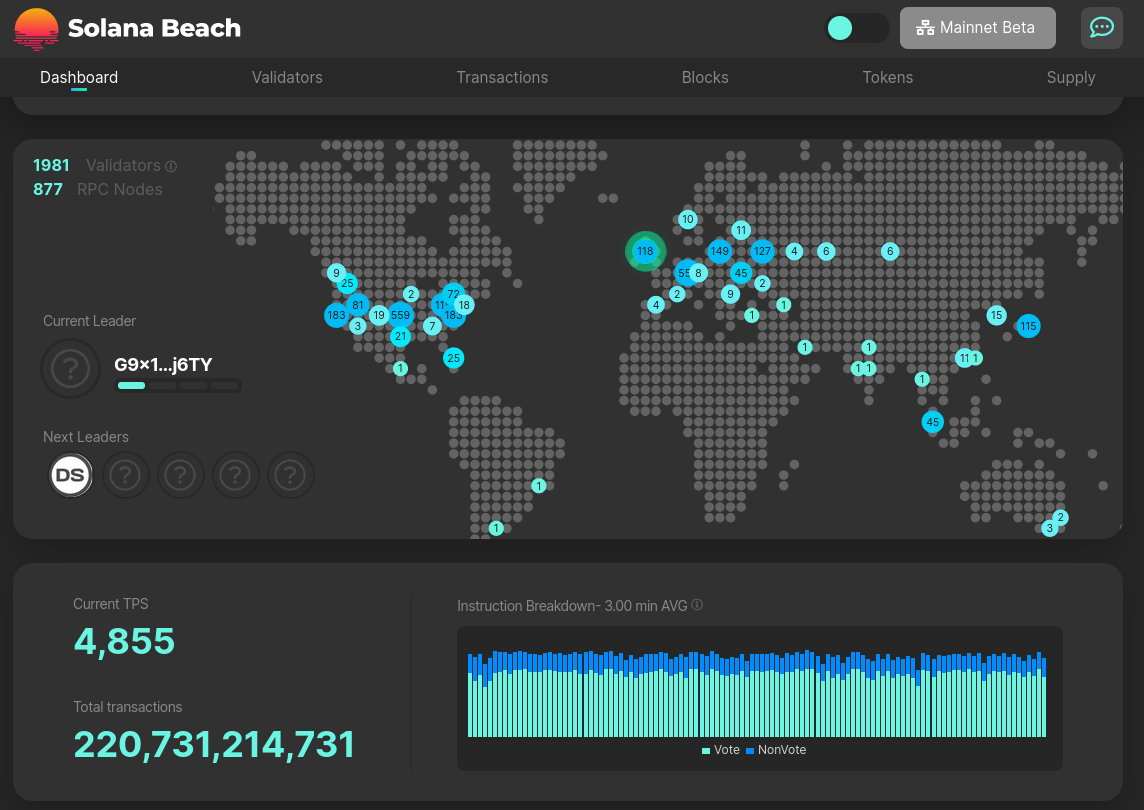

With chained HotStuff, a single node might be able to process 10 - 100 instructions per second. Again, this is a big ballpark guess, so don’t hold me to it. Let’s say 20 transactions per second on a sustained basis. That still doesn’t sound very fast, and it looks like Solana has disappeared into the distance with its claimed 50,000 TPS, though Solana’s real-world performance is closer to 1,400 dApp-related (non-voting) transactions per second. MEV strategies aside, the existence of an emerging fee market suggests that there isn’t as much spare capacity on the network as one might think.
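The per-node range above follows directly from the speculative 10ms - 100ms execution times, if we assume the node is purely CPU-bound and executes one instruction at a time. A rough sketch under those assumptions (the function name is illustrative, not part of any Tari API):

```python
def instructions_per_second(exec_ms: float) -> float:
    """Throughput of a CPU-bound node that executes instructions back to back."""
    return 1000.0 / exec_ms


for exec_ms in (10, 50, 100):
    print(f"{exec_ms} ms per instruction: {instructions_per_second(exec_ms):.0f} per second")
```

On this simple model, the conservative working figure of 20 transactions per second corresponds to an average execution time of 50ms per instruction.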

Scaling the Tari network

Let’s assume that we configure the Tari network so that there are 25 nodes per shard. If the network is tiny and has only 25 nodes, there will only be a single shard, and so our proto-Cerberus network will run like Aptos or Sui: every node must reach consensus on every transaction. Since each node running at full tilt can handle 20 transactions per second, that would be the network capacity.

Now let’s assume that the network grows to 100 nodes. The network will split into four shards, each with 25 nodes. Assuming the vast majority of transactions involve two shards, the network is effectively running two “cores” and overall capacity is now 40 transactions per second.

In a scenario of “full adoption”, there might be 10,000 nodes on the network. This would result in 400 shards, each with 25 nodes. This is equivalent to 200 “cores”, and the overall capacity is now 4,000 transactions per second, all the while maintaining sub-second finalisation.
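The three scenarios above can be reproduced with a back-of-the-envelope model. This is only a sketch of the reasoning in this post, not a simulation of Cerberus: it assumes every transaction touches the same number of shards, that load is spread evenly, and that each shard sustains the 20 transactions per second guessed at earlier. The function and parameter names are made up for illustration.

```python
def network_capacity(
    nodes: int,
    nodes_per_shard: int = 25,
    shards_per_tx: int = 2,
    node_tps: float = 20.0,
) -> float:
    """Estimated network-wide transactions per second."""
    shards = max(1, nodes // nodes_per_shard)
    # A transaction cannot involve more shards than exist.
    involved = min(shards, shards_per_tx)
    # Each transaction occupies `involved` shards, so the network behaves
    # like shards / involved independent "cores".
    cores = shards / involved
    return cores * node_tps


for nodes in (25, 100, 10_000):
    print(f"{nodes} nodes: ~{network_capacity(nodes):.0f} TPS")
```

Changing `shards_per_tx` to 3 shows how sensitive the headline number is to the assumption that most transactions involve only two shards.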

Closing thoughts

Can Tari get to 10,000 or even more nodes? There are currently between 5,500 and 12,000 Ethereum nodes running, depending on who you ask. Each of these must validate every transaction and maintain a copy of the entire state of the network. This places a huge burden on a node operator. Solana, Aptos, and all the other Layer One smart contract networks have the same problem.

As the Tari network grows and shards become smaller, each validator node will be required to hold less of the overall system’s state. Running a Tari node will always require roughly the same level of hardware (excluding storage) and should be somewhat economical to run in perpetuity. It’s not unreasonable to imagine that the Tari network will grow to an order of magnitude larger than any Layer One in terms of the number of nodes.

The incentives are designed so that the system is self-regulating in terms of capacity and is self-scaling: If nodes start to earn outsized rewards because congestion pushes fees higher, more nodes are incentivised to join the network. Barriers to entry are somewhat low (the hardware requirements are expected to remain modest), and so we expect the response to the demand to be fairly rapid. More nodes join, increasing the throughput capacity of the network, and reducing the strain on any individual node.

Disclaimer: This article makes use of the future tense. This should not be construed as a commitment or guarantee of future performance. The text makes it clear that the estimates given in the scenarios are speculative and unexpected difficulties or bottlenecks may arise that prevent any or all of these scenarios from being achieved.